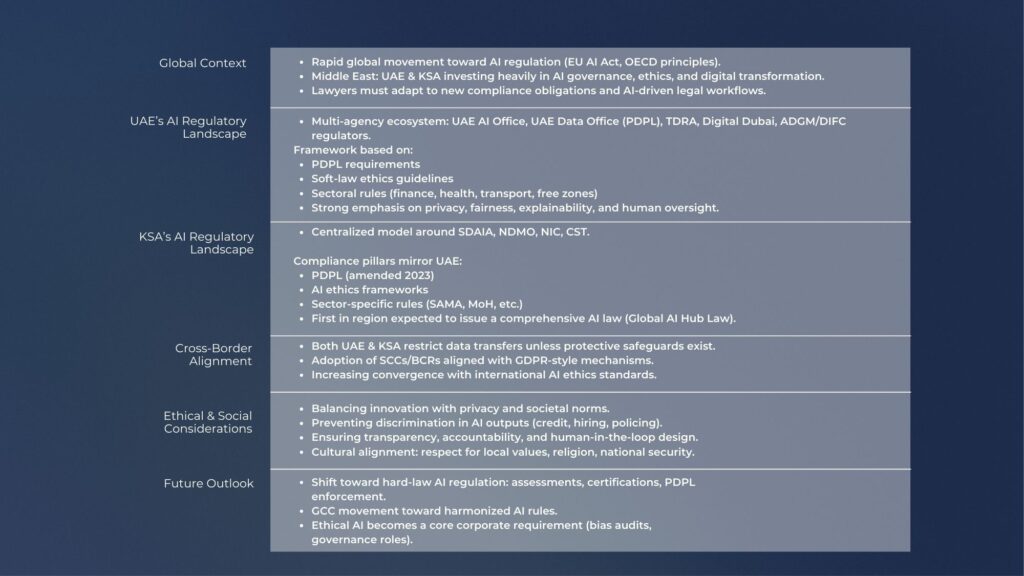

Artificial Intelligence (AI) has leapt from an emerging technology to a strategic pillar of economic, social, and governmental transformation across the Gulf. Both the United Arab Emirates (UAE) and the Kingdom of Saudi Arabia (KSA) have integrated AI into their national visions, targeting sustainable growth, digital leadership, and global competitiveness. Governing AI is not just about vision; it is about legal frameworks that balance innovation with ethics, security, and individual rights.

In this context, the regulatory landscapes in the UAE and KSA are evolving rapidly, blending legal structures, ethical principles, sector-specific guidance, and forward-looking frameworks that prepare the region for the next generation of digital transformation.

The UAE: A Multi-Layered Regulatory Ecosystem

In the UAE, AI governance is not yet consolidated in a single AI statute; it operates through a combination of data protection laws, ethical principles, guidelines, and national strategic policies.

Strategic Foundations

The UAE was one of the first countries globally to institutionalise AI at the federal level. In 2017, it appointed a Minister of State for Artificial Intelligence and introduced the National Strategy for Artificial Intelligence 2031, which places AI at the heart of economic competitiveness and public service delivery.

Legal and Compliance Landscape

While there is no stand-alone AI law covering all AI systems, AI is regulated through existing legal frameworks, especially those related to personal data and privacy. The Federal Personal Data Protection Law (PDPL), Federal Decree-Law No. 45 of 2021, governs how personal data, often used in AI training and processing, must be collected, processed, and stored. It includes consent requirements and safeguards aligned with international norms. Financial free zones such as the Dubai International Financial Centre (DIFC) and Abu Dhabi Global Market (ADGM) apply their own bespoke data protection regimes, some of which include AI-specific components.

Ethics and Principles

Overlaying the legal structures are ethical AI principles that emphasise transparency, fairness, explainability, accountability, privacy, and alignment with human values. The UAE’s AI Principles and Ethics framework articulates these core standards, reinforcing trust and safety in AI deployment across sectors.

Sector-Specific Guidance

Regulators in healthcare, finance, and transportation are increasingly issuing targeted guidance to ensure AI in sensitive contexts adheres to both general law and industry standards.

Saudi Arabia: Strategic Growth with Emerging Regulation

Saudi Arabia’s approach to AI law is similarly progressive but distinctive in its structure. With AI designated a strategic priority under Vision 2030, the Kingdom is building an ecosystem that promotes innovation while embedding ethical and governance guardrails.

Central Governance: SDAIA

The Saudi Data and Artificial Intelligence Authority (SDAIA) serves as the national reference body for data and AI strategy. Established by royal decree in 2019, SDAIA coordinates AI policy, data governance, and ethical guidelines across government and industry.

Legal Backdrop and Ethics

Currently, AI activities in Saudi Arabia are regulated through a combination of related laws and influential non-binding frameworks. The Personal Data Protection Law (PDPL) became effective in 2023 and sets rules for data controllers, processing conditions, breach notification timelines, and fines. SDAIA has issued AI ethics guidelines emphasising fairness, transparency, accountability, and human oversight. Specific guidance on emerging technologies, such as large language models, clarifies content authenticity and governance expectations.

Draft and Forthcoming AI Law

Saudi Arabia is developing a dedicated AI regulatory framework to support its position as a global AI innovation hub, foster investment in advanced digital technologies, enable scalable sovereign data infrastructure, and potentially introduce jurisdictional models for foreign AI services within defined legal structures. This anticipated law signals the Kingdom’s intention to create a more holistic AI-specific legal framework that complements broader economic and digital goals.

Comparatives

| Aspect | UAE | Saudi Arabia |
| --- | --- | --- |
| AI Law in Force | No single comprehensive AI law; regulated via PDPL, ethical principles, sector rules | No current standalone AI law: PDPL and ethics guidelines apply; draft AI law in development |
| Data Protection | Federal PDPL plus Free Zone regulations | PDPL 2023 with strict local requirements |
| Ethics and Governance | National ethical principles and strategic framework | SDAIA ethics principles and adoption guidance |
| Innovation Focus | Early adopter with strategic integration across sectors | Strategic emphasis on AI hub status and robust regulations |

Key Themes in AI Regulation

Innovation with Responsibility

Regulators emphasise the importance of maintaining technological advancement while embedding ethical safeguards against bias, opacity, and misuse.

Data Privacy as Regulatory Foundation

Because AI depends heavily on data, personal data protection regimes like PDPL are the legal cornerstone of AI governance in both the UAE and Saudi Arabia.

Sector-Driven Oversight

Healthcare, finance, and critical infrastructure have tailored guidance reflecting risk and public interest.

Toward Unified AI Laws

Both nations are moving toward more formal AI regulatory frameworks, signalling that current structures are transitional stages in a rapidly evolving legal environment.

Implications for Businesses and Practitioners

Organisations deploying or developing AI solutions in the UAE or Saudi Arabia must: ensure PDPL compliance for any personal data processing; adopt ethical AI practices aligned with the national ethical principles; monitor regulatory developments, including Saudi Arabia’s forthcoming AI law; and implement governance, risk, and compliance frameworks that embed AI lifecycle oversight and accountability.

The UAE and Saudi Arabia are shaping AI governance with strategic, layered frameworks that encourage innovation, protect individual rights, and foster ethical AI ecosystems. While neither country has yet enacted a comprehensive, standalone AI law, the combination of data protection legislation, ethical principles, draft laws, and sector guidance offers a robust, rapidly maturing regulatory environment. Staying informed and proactive in compliance is a key differentiator for businesses operating across the Gulf.

In summary: